Earlier this month, Apple announced new child safety features for its entire ecosystem. As part of this effort, the Cupertino firm plans to scan the contents of iCloud and the Messages app using on-device machine learning to detect Child Sexual Abuse Material (CSAM). Despite clarifications that the feature won't be used to violate privacy or be exploited to access users' messages and photographs, the announcement drew substantial criticism from the tech world and the public at large. In response, Apple released a six-page document outlining its approach to combating CSAM with on-device machine learning coupled with an algorithm dubbed NeuralHash.

Apple has further stated that its CSAM-detection module is still under development and that it will only look for images that have been flagged as problematic in multiple countries.

In the latest development, however, a curious Reddit user who goes by u/AsuharietYgvar dug into Apple's hidden APIs and performed what they believe is a reverse-engineering of the NeuralHash algorithm. Surprisingly, they found that the algorithm has existed in the Apple ecosystem since as early as iOS 14.3. That might raise some eyebrows, since the entire CSAM episode is a relatively recent development, but u/AsuharietYgvar said that they have good reason to believe that their findings are legitimate.

Firstly, they discovered that the model's files all carry the NeuralHashv3b prefix, which follows the naming used in Apple's six-pager. Secondly, they noticed that the uncovered source code uses the same procedure for generating hashes as the one outlined in Apple's document. Thirdly, Apple claims that its hashing scheme produces hashes that are nearly independent of the resizing and compression of the image, which is what the Reddit user found in the source code as well, further cementing their belief that they had indeed uncovered NeuralHash hidden deep in the source code.
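To make the idea of turning an image descriptor into a short hash more concrete, here is a toy sketch of sign-based hyperplane hashing, one common way to convert a vector of floating-point numbers into a fixed number of bits. It is an assumption that this resembles the procedure in the uncovered code; the function names, the random hyperplanes, and the sizes (a 128-number descriptor, a 96-bit hash) are all hypothetical and chosen only for illustration. This is not Apple's code or the Reddit user's.

```python
import random


def make_hyperplanes(n_bits, dim, seed=0):
    """Build n_bits random hyperplanes of dimension dim (illustrative stand-in
    for whatever fixed projection a real system would ship with)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def hyperplane_hash(descriptor, hyperplanes):
    """Return one bit per hyperplane: 1 if the descriptor lies on the
    non-negative side of the plane, 0 otherwise."""
    bits = []
    for plane in hyperplanes:
        dot = sum(p * x for p, x in zip(plane, descriptor))
        bits.append(1 if dot >= 0 else 0)
    return bits


def bits_to_hex(bits):
    """Pack a list of 0/1 values into a zero-padded hexadecimal string."""
    value = int("".join(str(b) for b in bits), 2)
    width = (len(bits) + 3) // 4
    return f"{value:0{width}x}"


if __name__ == "__main__":
    planes = make_hyperplanes(n_bits=96, dim=128, seed=1)
    rng = random.Random(2)
    # Hypothetical descriptor; a real one would come from the neural network.
    descriptor = [rng.uniform(-1.0, 1.0) for _ in range(128)]
    print(bits_to_hex(hyperplane_hash(descriptor, planes)))
```

Because each bit depends only on the sign of a dot product, small changes in the descriptor leave most bits untouched, and only dot products that sit very close to zero can flip. That is one plausible way a hash could end up nearly independent of resizing and compression.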

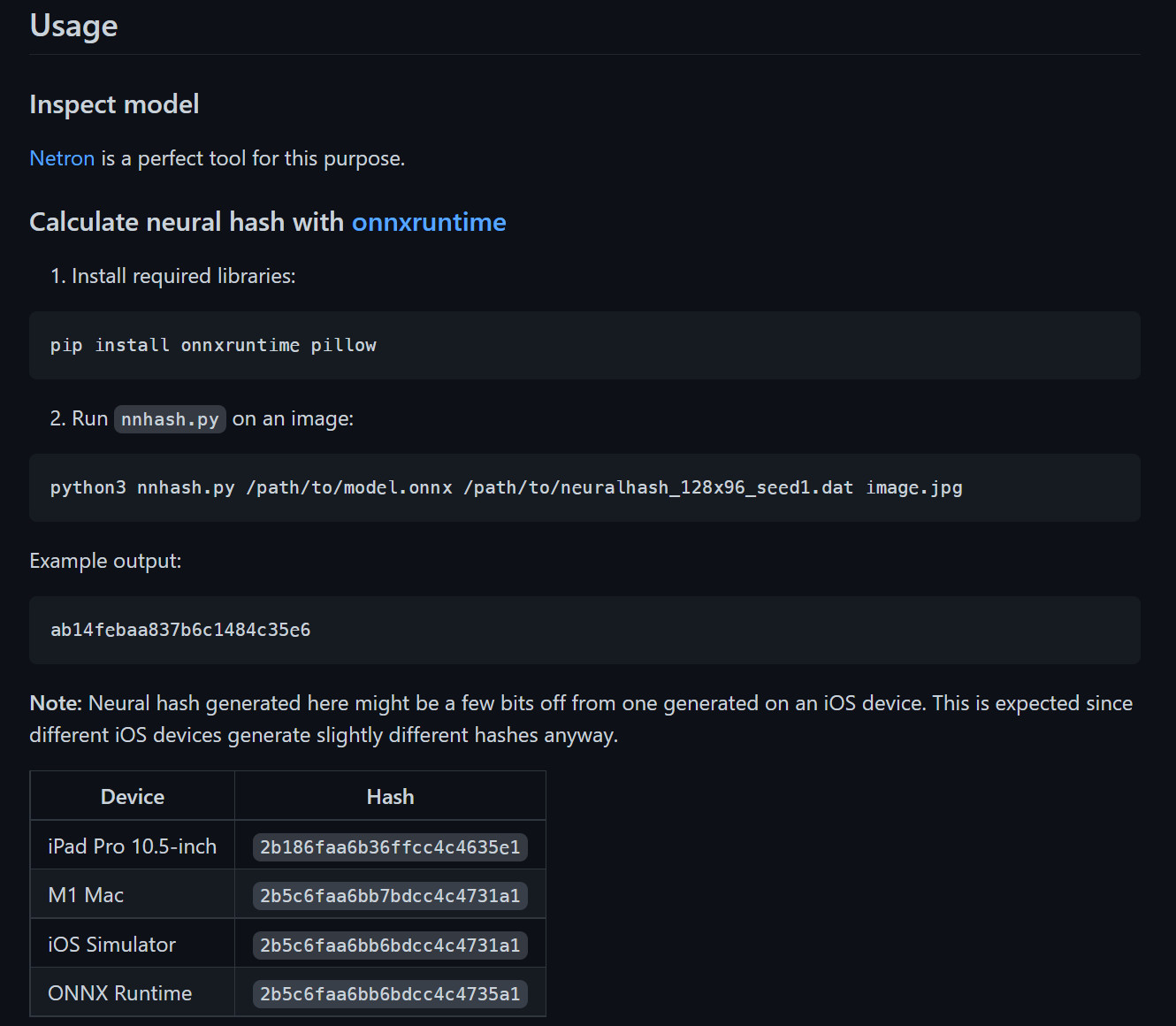

The Reddit user has released their findings on this GitHub repository. While they did not publish the exported model's files, they did outline a procedure for extracting the model and converting it to a deployable ONNX format yourself. After exporting the model, they ran inference on a sample image to generate its hashes.

As can be seen in the screenshot above, they've provided hashes for the same image on different devices. The hashes match across devices, barring a few bits. According to the Reddit user, this is expected behavior, as NeuralHash relies on floating-point calculations whose accuracy depends heavily on the hardware. They added that Apple will likely accommodate these off-by-a-few-bits differences in its subsequent database-matching algorithm.
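One simple way to tolerate hashes that differ by a few bits is to compare them by Hamming distance, that is, the number of bit positions in which they differ. The sketch below is only an illustration of that idea, not Apple's matching algorithm, which the article does not describe. The function names, the `max_differing_bits` threshold, and the hexadecimal values are hypothetical and made up for the example.

```python
def hamming_distance_hex(hash_a, hash_b):
    """Count the bits that differ between two equal-length hex strings."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    # XOR leaves a 1 in every position where the two hashes disagree.
    return bin(int(hash_a, 16) ^ int(hash_b, 16)).count("1")


def is_probable_match(hash_a, hash_b, max_differing_bits=4):
    """Treat two hashes as matching if they differ in at most
    max_differing_bits positions (the threshold is an arbitrary assumption)."""
    return hamming_distance_hex(hash_a, hash_b) <= max_differing_bits


if __name__ == "__main__":
    # Made-up 96-bit hashes standing in for the same image on two devices.
    device_one = "abcdef0123456789abcdef01"
    device_two = "abcdef0123456789abcdef03"
    print(hamming_distance_hex(device_one, device_two))
    print(is_probable_match(device_one, device_two))
```

An exact string comparison would treat the two values above as different images, whereas a distance threshold treats them as the same one. The trade-off is that a looser threshold also raises the chance of unrelated images being considered a match.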

The Reddit user believes that this is a good time to poke into the workings of NeuralHash and its implications for user privacy. If you are interested in exploring the details further, you may refer to this GitHub repository. Prerequisites, compilation, and usage steps are detailed therein.

Source: Reddit user reverse-engineers what they believe is Apple's solution to flag child sex abuse - Neowin (https://ift.tt/3k1ZLUh)